Apple has altered its planned system for detecting child sex abuse images after a week of criticism over privacy concerns.

The tech giant has said the system will now only hunt for images that have been flagged by clearinghouses in multiple countries.

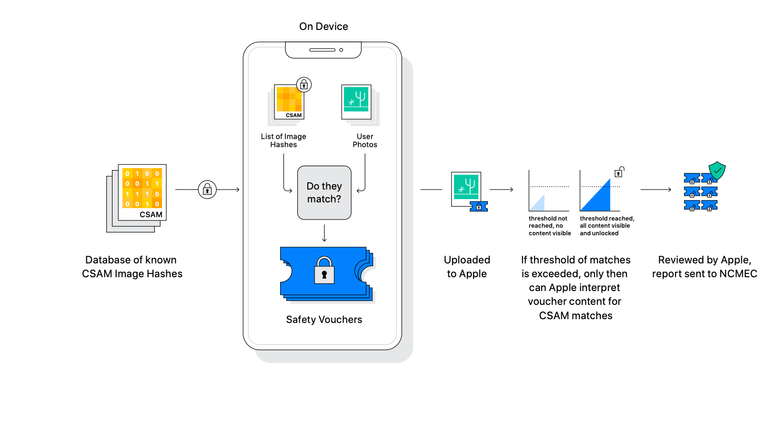

Last week, Apple announced a planned feature for iPhones that would automatically scan the device to see if it contained photos featuring child sexual abuse before they were uploaded to iCloud.

Apple said that if a match was found, it would report the image to the US National Center for Missing and Exploited Children (NCMEC).

The announcement sparked a backlash from influential tech policy groups and even from Apple's own employees, who were concerned that the company was jeopardising its reputation for protecting consumer privacy.

Apple had declined to say how many matched images on a phone or a computer it would take before the operating system notified the company for a human review and possible reporting to authorities.

But executives said on Friday the threshold would start at 30 images, though the number could fall over time as the system improves.
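
The two rules described so far, matching only identifiers flagged by clearinghouses in more than one country and holding back until 30 matches are found, can be illustrated with a minimal sketch. It is not Apple's implementation: the function names, the plain string identifiers and the two-country minimum are hypothetical, and treating exactly 30 matches as enough to trigger review is an assumption.

```python
from collections import Counter

# Starting figure given by executives; assumed here to mean "30 or more".
REVIEW_THRESHOLD = 30


def build_match_list(lists_by_country, min_countries=2):
    """Keep identifiers flagged by clearinghouses in at least `min_countries` countries.

    `lists_by_country` is a hypothetical mapping of country code to that
    country's list of flagged image identifiers.
    """
    counts = Counter()
    for identifiers in lists_by_country.values():
        # A set ensures each country counts once per identifier.
        counts.update(set(identifiers))
    return {ident for ident, n in counts.items() if n >= min_countries}


def needs_human_review(device_identifiers, match_list, threshold=REVIEW_THRESHOLD):
    """Return True once the number of matched images reaches the threshold."""
    matches = sum(1 for ident in device_identifiers if ident in match_list)
    return matches >= threshold
```

In this sketch an identifier that appears on only one country's list never enters the match list, and a device with 29 matches is not flagged for review.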

Apple also said it would be easy for researchers to make sure that the list of image identifiers being sought on one iPhone was the same as the lists on all other phones, seeking to blunt concerns that the new mechanism could be used to target individuals.
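
One common way to compare two lists without exchanging them in full, offered here as an illustration rather than as Apple's method, is to reduce each list to a single fingerprint and compare the fingerprints. The sketch below assumes the identifiers are available as strings and uses SHA-256 from Python's standard library; the function name `list_fingerprint` is hypothetical.

```python
import hashlib


def list_fingerprint(identifiers):
    """Return a hex digest that depends only on which identifiers are in the list.

    Sorting and de-duplicating first means two devices holding the same
    identifiers in a different order still produce the same fingerprint.
    """
    digest = hashlib.sha256()
    for ident in sorted(set(identifiers)):
        digest.update(ident.encode("utf-8"))
        digest.update(b"\n")  # separator so "ab" + "c" differs from "a" + "bc"
    return digest.hexdigest()
```

If two phones report the same fingerprint, they hold the same set of identifiers; a list altered for one device would produce a different value.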

The alteration was detailed at the fourth background briefing since the plan to monitor customer devices was first announced just over a week ago.

Apple declined to say whether the criticism it had received had changed any of the policies or software, but said that the project was still in development and changes were to be expected.

Asked why it had named only the US National Center for Missing and Exploited Children as a supplier of flagged image identifiers, when at least one other clearinghouse would need to have separately flagged the same picture, an Apple executive said NCMEC was the only organisation with which the company had finalised a deal.

The rolling series of explanations, each giving more details that make the plan seem less hostile to privacy, convinced some of the company’s critics that their voices were forcing real change.

Riana Pfefferkorn, an encryption and surveillance researcher at Stanford University, tweeted: “Our pushing is having an effect.”

Apple said last week that it would check photos that were about to be stored on the iCloud online service, adding later that it would begin with just the United States.

Other technology companies perform similar checks once photos are uploaded to their servers.

Apple’s decision to put key aspects of the system on the phone itself prompted concerns that governments could force Apple to expand the system for other uses, such as scanning for prohibited political imagery.