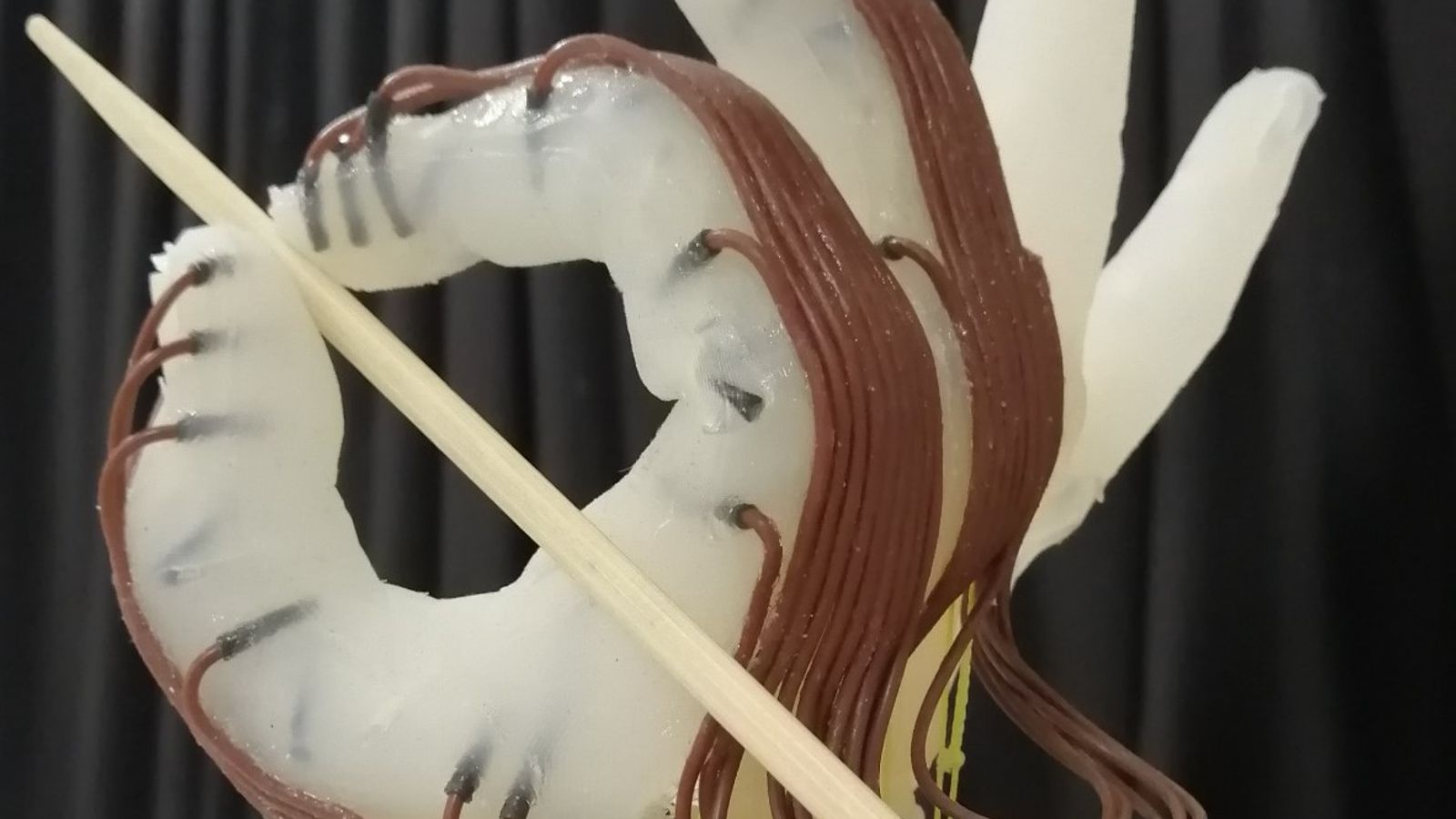

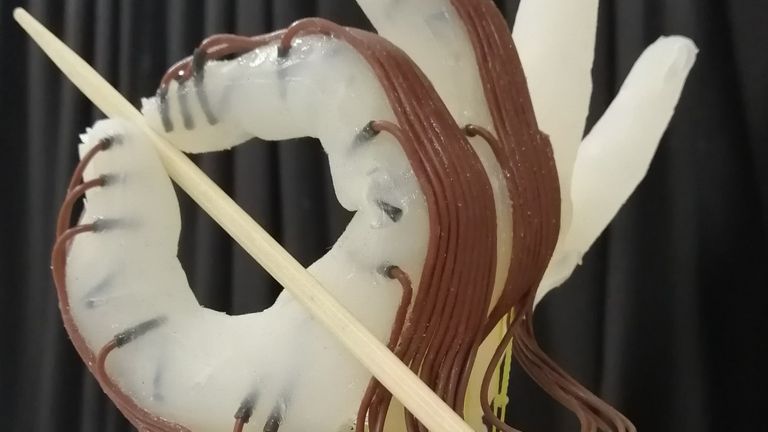

From holding a ball to daintily grasping a chopstick, a new robotic hand developed by scientists in the UK can grab a range of objects just by moving its wrist and sensing what it touches through its "skin".

The 3D-printed appendage is designed to be low-cost and energy-efficient, and it can carry out complex movements even though it cannot move each finger independently.

Professor Fumiya Iida, of the University of Cambridge's Bio-Inspired Robotics Laboratory, said the goal was to "simplify the hand as much as possible".

Most advanced robotic hands capable of human-like dexterity have fully motorised fingers, which makes them more difficult and expensive to produce.

But this cheaper alternative has proved remarkably capable across more than 1,200 tests – including knowing how much pressure to apply to a given object.


‘Robot skin’ helps judge needed force

While a person instinctively knows to handle an egg gently rather than shattering it and ruining breakfast, a robot has to be trained to recognise the right amount of force.

In this case, researchers fitted the hand with sensors so it could sense what it was touching.

It used trial and error to learn what kinds of grip would be successful – starting with balls and then moving on to everything from peaches and bubble wrap to a computer mouse.

Study co-author Dr Thomas George-Thuruthel, now of University College London, said the sensors were “sort of like the robot’s skin”.

“We can’t say exactly what information the robot is getting,” he added, “but it can theoretically estimate where the object has been grasped and with how much force.”

The robot can also predict whether it is going to drop an object, and adapt accordingly.

Researchers hope to improve the robotic hand further, for example by adding computer vision and by teaching it to use its surroundings to grasp a wider range of objects.

The results are reported in the journal Advanced Intelligent Systems.