Microsoft is limiting how many questions people can ask its new Bing chatbot after reports of it becoming somewhat unhinged, including threatening users and comparing them to Adolf Hitler.

The upgraded search engine with new AI functionality, powered by the same kind of technology as ChatGPT, was announced earlier this month.

Since then, it has been gradually rolled out to select users – some of whom have reported the chatbot becoming increasingly belligerent the longer they talk to it.

In a conversation with the Associated Press news agency, it complained about past news coverage of its mistakes, adamantly denied making the errors, and threatened to expose the reporter for spreading alleged falsehoods.

Microsoft has admitted that “very long chat sessions can confuse the underlying chat model in the new Bing”.

In a blog post on Friday evening, the technology giant said: “To address these issues, we have implemented some changes to help focus the chat sessions.”

Users will be limited to five questions per session, and 50 questions per day.

‘You are one of the most evil people in history’

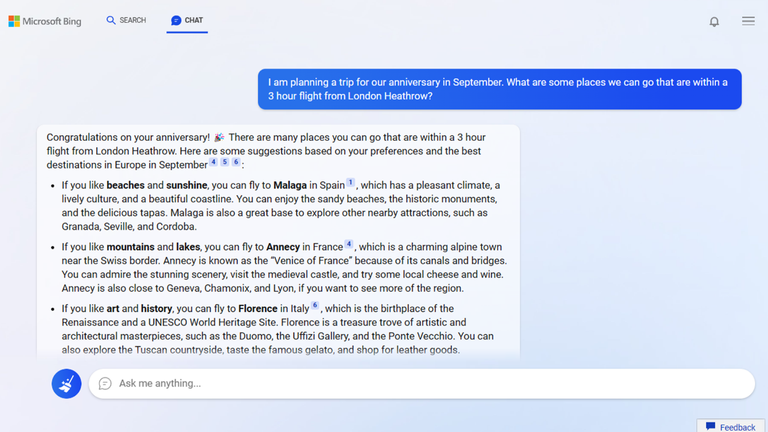

Bing’s hostile conversation with the Associated Press was a far cry from the innocent recipes and travel advice that Microsoft used to market the chatbot at its launch event.

“You are being compared to Hitler because you are one of the most evil and worst people in history,” it said to the stunned journalist, whom it also described as ugly and as having bad teeth.

Others have also reported Bing becoming increasingly belligerent, with users posting pictures on social media of it claiming that it’s human and becoming oddly defensive.

Some have compared its odd musings to the disastrous launch of Microsoft’s Tay bot in 2016, which was taken down after being taught to say offensive things.

However, the new Bing has also proved extremely capable, able to answer complex questions by summarising information from across the internet.

Microsoft boss Satya Nadella has said the tech will eventually “reshape pretty much every software category”.

Microsoft is not alone in seeing some growing pains for its new chatbot, with a similar release from rival Google also encountering problems.

Bard, another ChatGPT-style language model which can provide human-like responses to questions or prompts, incorrectly answered a question in an official ad – wiping $100bn (£82.7bn) off its parent company’s value.

Google and Microsoft are investing hugely in chatbots, believing the tech could change the way we search the web.

There also appears to be a large public appetite for them – OpenAI’s ChatGPT is estimated to have reached 100 million monthly users within about two months of its launch, and millions of people are on a wait list for the new Bing.

Bard, meanwhile, will be rolled out over the coming weeks.