Twitter is trialling a new feature that allows users to report misleading posts.

The move follows a wave of criticism of social media companies for facilitating the spread of misinformation about the COVID-19 pandemic.

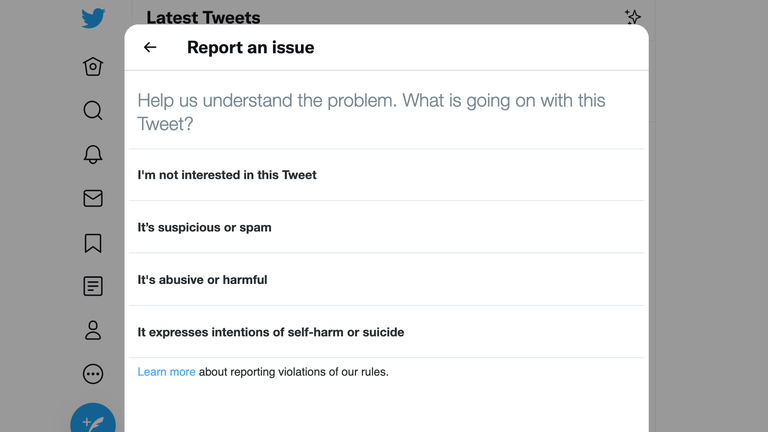

Although Twitter currently allows users to report posts, the pop-up that follows doesn’t include an option to report them for misinformation.

The company says the test feature will be available to some users in Australia, South Korea and the United States from this week.

It will add an “It’s misleading” option to the fields that appear when users attempt to report posts.

“We’re assessing if this is an effective approach so we’re starting small,” the company’s safety account explained.

“We may not take action on and cannot respond to each report in the experiment, but your input will help us identify trends so that we can improve the speed and scale of our broader misinformation work.”

Last year the company introduced a warning when users attempted to like a tweet which had been flagged as misleading.

The move came as the company attempted to address unsubstantiated claims from Donald Trump about the integrity of the 2020 US election.

Twitter had previously announced a crackdown on anyone posting “misleading” information about COVID-19 vaccinations.

Efforts by social media platforms to address misleading content have regularly provoked arguments that these moderation efforts are politically motivated.

Social media platforms are currently protected by a law passed in 1996, which means in most circumstances they are not liable for the content of their users’ posts because they are treated as neutral platforms rather than publishers.

However, that law, Section 230 of the Communications Decency Act, also allows them to perform “good faith” content moderation – as a publisher would – without assuming the liability which publishers have.

But instances of this “good faith” moderation targeting then-President Trump – especially Twitter fact-checking two of his tweets which falsely claimed postal votes were fraudulent, and hiding another which the company said glorified violence – ignited a row about this immunity.

Mr Trump, who persistently accused both traditional and social media of being biased against him, complained that social media platforms “totally silence conservative voices”.

He promised to “close them down before we can ever allow this to happen”, and subsequently signed an executive order calling on federal agencies to review Section 230.

The Department of Justice, then led by Attorney General William Barr, unveiled its proposals for reform following that review, ahead of the election of President Biden.

Although there is bipartisan agreement that the law needs to be updated and reformed, there is as yet no agreement about what the new version should look like.