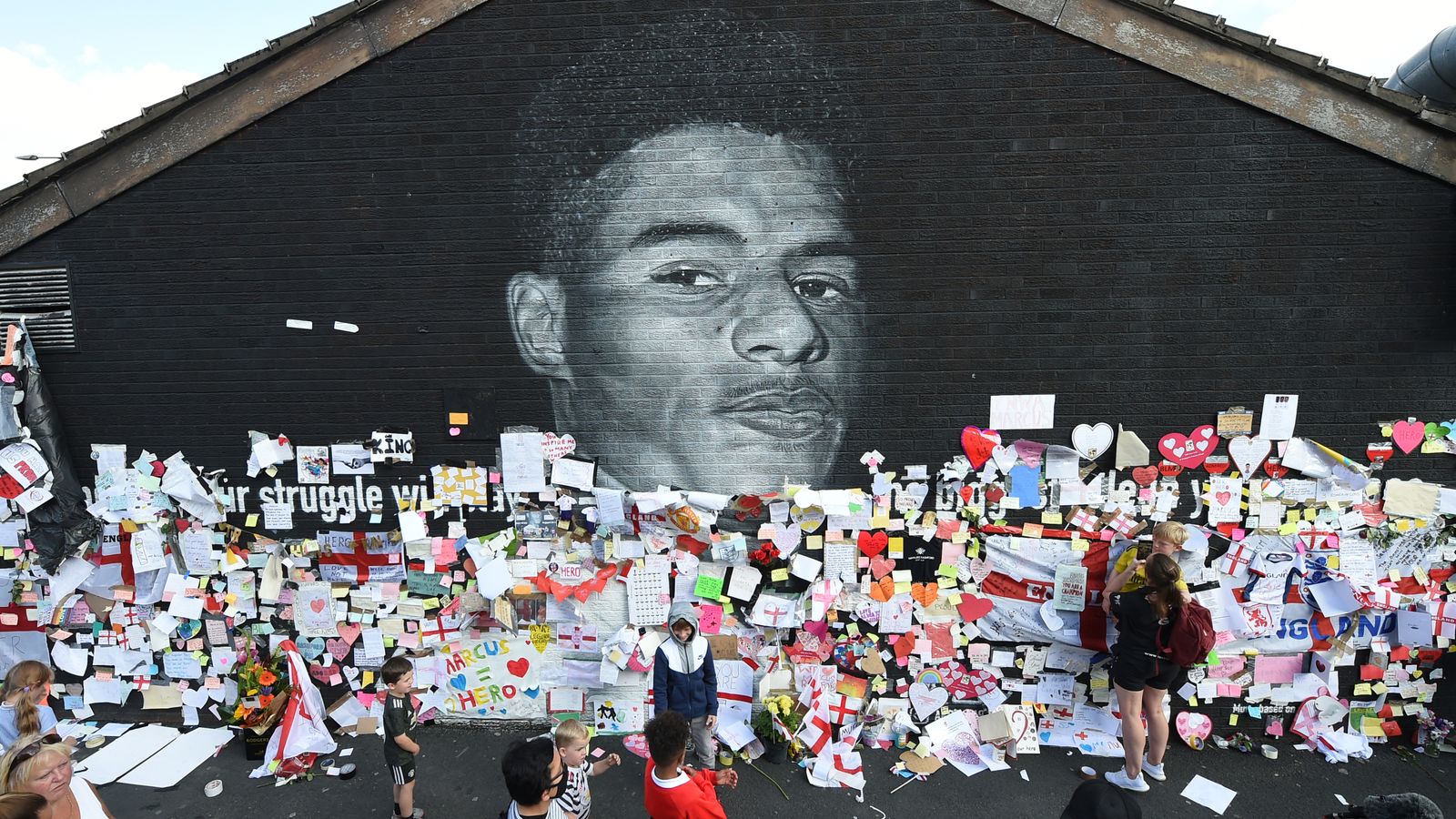

Sky News has analysed 99 accounts involved in racially abusing England players Bukayo Saka, Jadon Sancho and Marcus Rashford on social media.

The accounts, which were identified by the campaign group Center for Countering Digital Hate (CCDH), were responsible for over 100 racist comments on the players’ Instagram posts.

CCDH reported 106 accounts to the social media platform, but three days later just seven had been removed.

This is despite the comments violating Instagram’s community guidelines.

We found that only three of the still-active accounts appeared to be based in the UK, with one seemingly run by someone of primary school age.

Over a quarter of the comments were sent from anonymous private accounts with no posts of their own.

But identifying perpetrators of online hate is just one part of the problem.

Ensuring that hateful content is removed from platforms presents its own challenges, according to Professor Matthew Williams, author of The Science of Hate and a professor at HateLab, Cardiff University.

And campaigners say that the government must work with social media companies on both issues to stop platforms “giving a megaphone to racism, abuse and hate”.

One of the first things we wanted to establish was which of the accounts involved were being operated by people in the UK.

Even this is difficult. It’s hard to know whether a user is who they say they are.

It’s impossible to confirm through open-source techniques alone that an account is being operated from a particular country.

There are, however, some clues that can help.

A native English speaker, for example, might structure sentences in a different way to someone for whom English is a second language, or use particular spellings or slang.

Other markers are the type of accounts the user follows and interacts with, and the times at which the user is posting.

Based on these markers, we believe that just three of the accounts still active at the time of our analysis were operated by people living in the UK.

Two of these appeared to be run by men in their twenties.

The other appeared to be run by someone of primary school age.

A closer look at one of the removed accounts that appeared to be run from the UK revealed that, although it had been taken down, a second private account using the same picture and an almost identical username was still in operation.

The second profile, which has existed since at least April 2021, even posted racist comments on a post by another of the profiles flagged by CCDH in the days after the game.

The comments were left under a picture watermarked with the logo of a European white supremacist organisation.

This user regularly shares content promoting the white genocide conspiracy theory known as “The Great Replacement”.

But the profile picture on this account is of a young girl.

A reverse image search generates a link to a man’s account on the Russian social media site VK, although the connection between the image and the account is unclear.

These two examples illustrate how easily users can circumvent the restrictions put in place by social media platforms and continue to spread hate.

Other users may anonymise their existing accounts so that the comments they post are not traceable to them in the offline world.

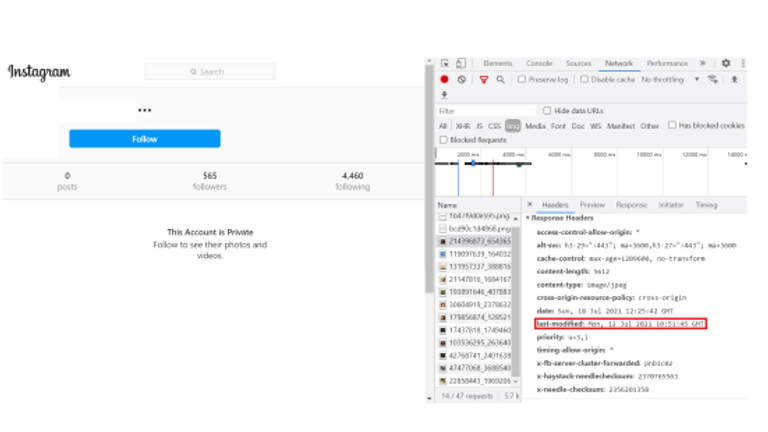

According to the metadata of their profile images, three of the accounts we looked at had last modified their profile pictures in the hours after the final.

That could mean either that the account’s picture was changed at that time, or that the account was created at that time.

It is unknown whether the profiles were created specifically to post hateful messages, were altered to conceal the identity of the person spreading the abuse, or whether the timing was simply a coincidence.

But it reveals just how easy it is to become faceless online and how challenging it can be to trace who is really behind these profiles.

We found that over a quarter of the comments flagged by CCDH came from private profiles with zero posts.

Many of these also had zero followers, which is common among accounts used specifically for trolling.

Some accounts, however, appear to be the personal profiles of users who seem to have made no attempt to conceal their identity.

These include the account of a Russian filmmaker, who regularly shares professionally taken snaps from various film shoots around Moscow.

CCDH picked up on the account after it left racist and homophobic slurs in comments on one of Jadon Sancho’s posts.

Other comments came from the personal account of a bodyguard to Azeri members of parliament. Another came from the business account of a welding company based in Tehran.

Many of the accounts we analysed appeared to be run by fans of English football teams.

One of the users that commented abuse on a post by Jadon Sancho has a separate social media account which has “Everton” in the username.

We also found profiles that present themselves as fans of Manchester United and Liverpool FC among the 106 flagged by CCDH.

Most of these appeared to be run by people living outside the UK.


HateLab’s Professor Williams says that while social media companies and police can identify perpetrators, this rarely happens.

“The issue is platforms have been reluctant to help in investigations, and largely refuse to cooperate when content does not reach a criminal threshold, which includes many of the posts sent to football players in recent months.”

“But even when account holders have been identified, little can be done by the UK authorities to punish the offender if they are based abroad.”

When it comes to detecting and removing hateful content, it’s even more complicated.

“The AI used to automatically detect harmful content is currently not up to scratch, meaning it still finds large amounts of false positives (content classified as hateful when it is not) and false negatives (content not identified as hateful when it is),” he told Sky News.

“This means some non-hateful content may get censored, creating risks to freedom of expression and the rights of the poster, and some hateful content is missed, resulting in possible harm to the victim or community.”

Prof Williams also highlighted the unique challenge that emojis present to social media platforms, because they can be used in both positive and hateful ways, depending on the context.

Sky News found that just 5% of the posts we analysed that used racist emojis came from accounts that Instagram later removed.

This compares with 17% of the posts that used racist language.

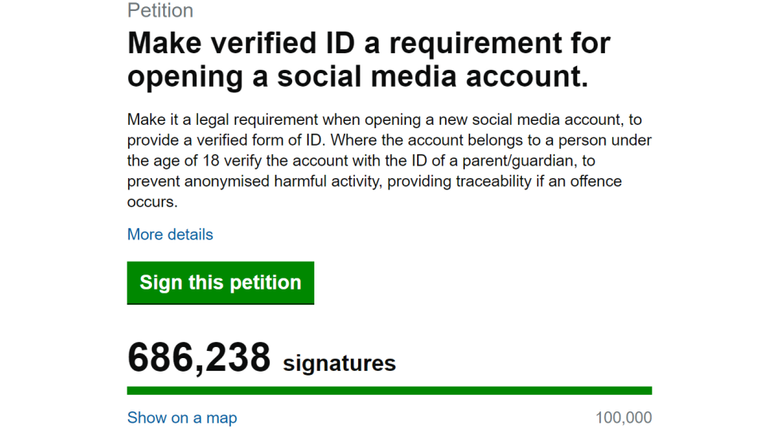

A petition to make verified ID a requirement for opening a social media account has gained over 500,000 signatures since the Euro 2020 final.

The petition was initially set up in May by reality star Katie Price in response to the perceived limitations of the government’s Online Safety Bill in protecting users from hateful speech.

It gained momentum this week after a wave of collective anger at the racism directed at Saka, Sancho and Rashford swept the country.

Prime Minister Boris Johnson said on Wednesday that the government is working to ensure that Football Banning Orders are extended to include online racism.

That followed a meeting on Tuesday between the government and social media giants Facebook, Twitter and TikTok, at which the prime minister said he had told them that the Online Safety Bill would legislate to address the problem.

The bill proposes that social media companies should face fines of up to 10% of their global revenue if they fail to remove racism and other hate speech from their platforms.

But some experts stress that the bill won’t stop hateful and racist messages like those seen after Sunday’s match from appearing online.

“The bill will not force social media platforms to prevent the posting of hateful content, and will only require them to remove it in a timely fashion,” said Prof Williams.

“So, if a hate post is directed at a person the chances are they will see it before it is removed, and the damage will be done.

“Much of what the bill proposes in terms of the swift removal of illegal content is already covered by the EU Code of conduct on countering illegal hate speech online, to which most of the big social media companies have already signed up, so in reality it is doubtful much will change.”

The Data and Forensics team is a multi-skilled unit dedicated to providing transparent journalism from Sky News. We gather, analyse and visualise data to tell data-driven stories. We combine traditional reporting skills with advanced analysis of satellite images, social media and other open source information. Through multimedia storytelling we aim to better explain the world while also showing how our journalism is done.
